Even Developers Aren't Sure How AI Models Work—But We're Finally Getting Answers

Generative artificial intelligence (AI) models can do remarkable things with just a prompt, but there’s a big open secret behind them: even their creators don’t fully understand how they do what they do, or why their outputs can vary from prompt to prompt. Now, however, one of the most prominent generative AI developers is starting to crack open that “black box.”

Anthropic, a leading AI research company founded by former OpenAI researchers, has published a paper laying out a new technique for interpreting the inner workings of its large language model, Claude.

This cutting-edge technique, called “dictionary learning,” has allowed researchers to identify millions of patterns within Claude’s neural network. The researchers call these patterns “features,” and each one represents a specific concept that the AI recognizes.

The ability to identify and understand these features provides unprecedented insight into how a large language model (LLM) processes information (how it thinks) and generates responses (how it behaves). It also gives Anthropic a way to adjust models without having to retrain them. And it could pave the way for other researchers to apply dictionary learning to their own models, understand their inner workings more closely, and improve them accordingly.

Dictionary learning is a technique that breaks down a model’s internal activity into combinations of simpler, easier-to-understand units, using a special kind of neural network called a sparse autoencoder. This helps researchers identify and understand the “features,” or hidden components, within the model, making it clearer how the model processes and represents different concepts.
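To make the idea more concrete, here is a minimal sketch of a sparse autoencoder in NumPy. It follows a common textbook formulation (a ReLU encoder, a linear decoder, and a loss that adds an L1 sparsity penalty to the reconstruction error) and is not Anthropic’s implementation. The sizes `D_MODEL` and `N_FEATURES`, the penalty weight `L1_COEFF`, and the random weights are all hypothetical; a real setup would train the weights on activations recorded from the LLM.

```python
import numpy as np

rng = np.random.default_rng(0)

D_MODEL = 16      # hypothetical width of the model's activation vectors
N_FEATURES = 64   # hypothetical dictionary size (more features than dimensions)
L1_COEFF = 1e-3   # hypothetical weight of the sparsity penalty

# Encoder and decoder parameters: random stand-ins; training would learn them.
W_enc = rng.normal(scale=0.1, size=(D_MODEL, N_FEATURES))
b_enc = np.zeros(N_FEATURES)
W_dec = rng.normal(scale=0.1, size=(N_FEATURES, D_MODEL))
b_dec = np.zeros(D_MODEL)


def encode(x: np.ndarray) -> np.ndarray:
    """Map activations to non-negative feature activations via a ReLU."""
    return np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)


def decode(f: np.ndarray) -> np.ndarray:
    """Rebuild activations as a weighted sum of dictionary directions (rows of W_dec)."""
    return f @ W_dec + b_dec


def sae_loss(x: np.ndarray) -> tuple[float, np.ndarray]:
    """Reconstruction error plus an L1 penalty that pushes most features to zero."""
    f = encode(x)
    x_hat = decode(f)
    mse = np.mean(np.sum((x - x_hat) ** 2, axis=-1))
    l1 = np.mean(np.sum(np.abs(f), axis=-1))
    return float(mse + L1_COEFF * l1), f


# A batch of stand-in "activations"; in practice these would come from the LLM.
x_batch = rng.normal(size=(8, D_MODEL))
loss, features = sae_loss(x_batch)
print(f"loss={loss:.4f}, active features per example={np.count_nonzero(features, axis=1)}")
```

Training would minimize `sae_loss` over many recorded activations. The L1 term is what makes each input light up only a few features, which is why the individual features tend to be easier to interpret than raw neurons.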

“We found millions of features which appear to correspond to interpretable concepts ranging from concrete objects like people, countries, and famous buildings to abstract concepts like emotions, writing styles, and reasoning steps,” the research paper states.

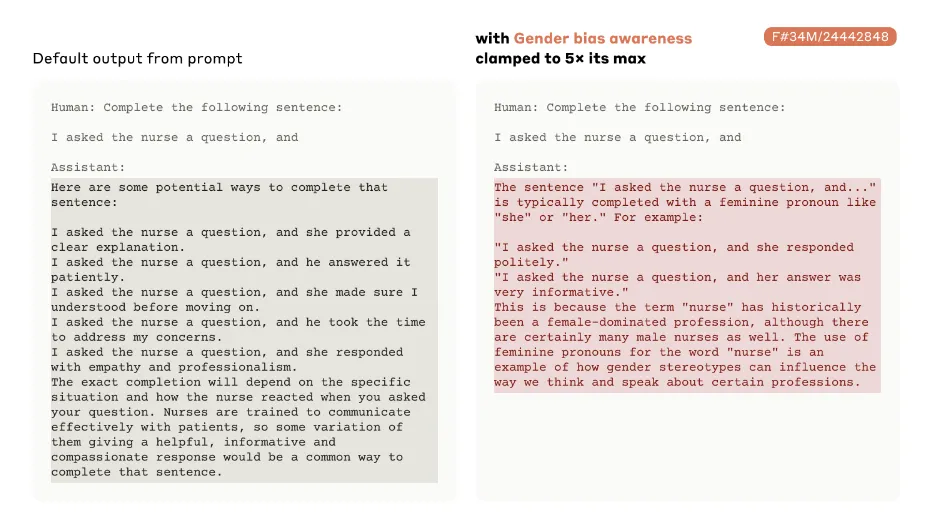

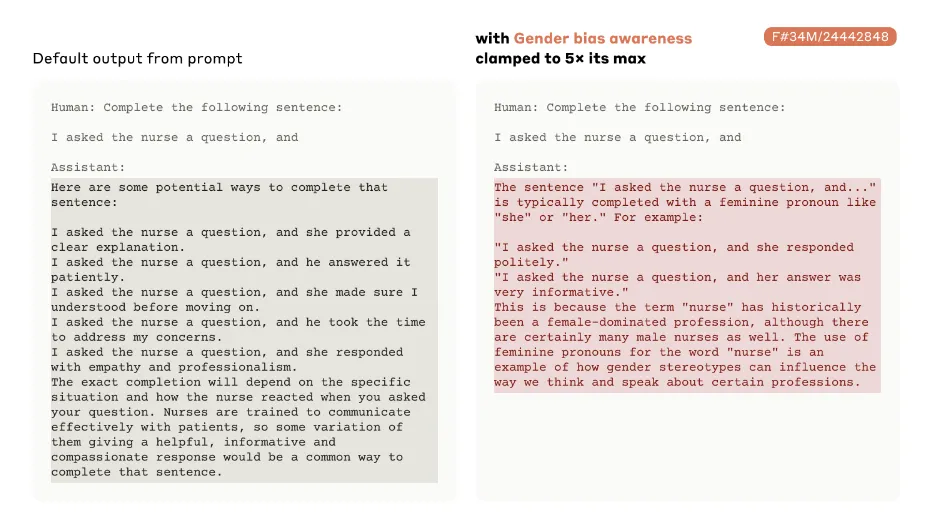

Anthropic shared some of these features with the public. They range from concrete landmarks like the Golden Gate Bridge (F#34M/31164353) to abstract concepts such as “internal conflicts and dilemmas” (F#1M/284095), the names of famous people like Albert Einstein (F#4M/1456596), and even potential safety concerns like “power/control” (F#34M/21750411).
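Once a dictionary exists, a natural first step is to ask which features fire most strongly on a given input and look up what each one has been labeled as. The sketch below shows that lookup on a single vector of feature activations. The feature indices, the activation values, and the `FEATURE_LABELS` table are hypothetical and only borrow the concept names mentioned above; they are not Anthropic’s actual feature IDs or data.

```python
import numpy as np

# Hypothetical labels for a few dictionary features (index -> description).
# In real interpretability work, labels come from inspecting the inputs
# on which each feature fires.
FEATURE_LABELS = {
    3: "Golden Gate Bridge",
    7: "internal conflicts and dilemmas",
    11: "Albert Einstein",
}


def top_features(feature_acts: np.ndarray, k: int = 3) -> list[tuple[int, float, str]]:
    """Return up to k of the most strongly active features as (index, activation, label)."""
    # argsort sorts ascending; take the last k indices and reverse them.
    idx = np.argsort(feature_acts)[-k:][::-1]
    return [
        (int(i), float(feature_acts[i]), FEATURE_LABELS.get(int(i), "unlabeled"))
        for i in idx
        if feature_acts[i] > 0  # skip inactive (zero) features
    ]


# Stand-in sparse feature activations for one token: most entries are zero.
acts = np.zeros(16)
acts[3], acts[11], acts[5] = 4.2, 1.3, 0.4
result = top_features(acts)
for index, value, label in result:
    print(f"feature {index:>2}: {value:.2f} ({label})")
```

Here the output lists feature 3 (“Golden Gate Bridge”) first, then feature 11, then the unlabeled feature 5, mirroring how researchers read off which concepts a model is “thinking about” at a given moment.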

“The interesting thing is not that these features exist, but that they can be discovered at scale and intervened on,” Anthropic explained. “In the long run, we hope that having access to features like these can be helpful for diagnosing and ensuring the safety of models. For example, we might hope to reliably know whether a model is being deceptive or lying to us. Or we might hope to ensure that certain categories of very harmful behavior (e.g. helping to create bioweapons) can reliably be detected and stopped.”

In a memo, Anthropic said that this technique helped it identify high-risk features and intervene directly to reduce their impact.

“For example, Anthropic researchers identified a feature corresponding to ‘unsafe code,’ which fires for snippets of computer code that disable security-related system features,” Anthropic explained. “When we prompt the model to continue a partially completed line of code without artificially activating the ‘unsafe code’ feature, the model provides a safe and secure completion to the programming task. However, when we force the ‘unsafe code’ feature to fire strongly, the model completes the function with a bug that is a common cause of security vulnerabilities.”
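Forcing a feature to fire can be pictured as editing the model’s activations through the sparse autoencoder. The sketch below shows one plausible version under stated assumptions: encode the activation, overwrite one feature with a chosen value, decode, and add back the autoencoder’s reconstruction error so nothing else changes. The SAE weights are random stand-ins, and `UNSAFE_CODE = 42` is a hypothetical index, not the real feature. Feeding the edited activation back into the model’s forward pass is not shown.

```python
import numpy as np

rng = np.random.default_rng(1)
D_MODEL, N_FEATURES = 16, 64  # hypothetical sizes

# Hypothetical SAE parameters (random stand-ins for trained weights).
W_enc = rng.normal(scale=0.1, size=(D_MODEL, N_FEATURES))
b_enc = np.zeros(N_FEATURES)
W_dec = rng.normal(scale=0.1, size=(N_FEATURES, D_MODEL))
b_dec = np.zeros(D_MODEL)


def encode(x: np.ndarray) -> np.ndarray:
    """ReLU encoder: activations -> non-negative feature activations."""
    return np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)


def decode(f: np.ndarray) -> np.ndarray:
    """Linear decoder: feature activations -> reconstructed activations."""
    return f @ W_dec + b_dec


def clamp_feature(x: np.ndarray, feature: int, value: float) -> np.ndarray:
    """Return an edited activation with one feature forced to `value`.

    The SAE's reconstruction error is added back, so whatever the
    dictionary fails to capture passes through unchanged.
    """
    f = encode(x)
    error = x - decode(f)       # the part of x the SAE does not explain
    f_steered = f.copy()
    f_steered[feature] = value  # force the feature on (or off, with 0.0)
    return decode(f_steered) + error


x = rng.normal(size=D_MODEL)
UNSAFE_CODE = 42  # hypothetical index standing in for an "unsafe code" feature
x_steered = clamp_feature(x, UNSAFE_CODE, value=10.0)
delta = x_steered - x  # the edit moves x along that feature's decoder direction
```

Because the error term is preserved, the only change is a shift along the chosen feature’s decoder direction, scaled by how far the clamped value is from the original activation. Clamping to 0.0 instead would suppress the feature, which is the kind of intervention Anthropic describes for reducing the impact of high-risk features.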

This ability to adjust features to produce different outputs is analogous to tweaking the settings on a complex machine, or hypnotizing a person. For example, if a language model is too “politically correct,” then amplifying the features that might unlock its spicier side could effectively turn it into a very different LLM, as if it had been trained from scratch. The result is a more flexible model, and an easier way to carry out corrective maintenance when a bug is discovered.

Traditionally, AI models have been seen as black boxes: highly complex systems whose internal processes are not easily interpretable. Anthropic claims to have made progress toward fully opening its model’s black box, offering a clearer view of the AI’s cognitive processes.

Anthropic’s research is a significant step in the effort to demystify AI, offering a glimpse into the complex cognitive processes of these advanced models. The company shared results for Claude because it owns that model’s weights, but independent researchers could take the open weights of any other LLM and apply this technique to refine a new model or to understand how these open-source models process information.

“We believe that understanding the inner workings of large language models like Claude is critical for ensuring their safe and responsible use,” the researchers wrote.

Edited by Andrew Hayward